Fundamentals of Statistics contains material from various lectures and courses by H. Lohninger on statistics, data analysis and chemometrics.


See also: regression, Curvilinear Regression, Regression - Confidence Interval, Regression after Linearisation

Univariate Regression - Derivation of Equations

The principle of this derivation is quite simple: the least squares regression curve is the one that minimizes the sum of squared differences (residuals) between the estimated and the actual y values for the given x values. Therefore, you first define the equation for the sum of squares, calculate its partial derivatives with respect to each parameter, and set them equal to zero. The rest is plain algebra to obtain an expression for each parameter.

Let us carry out this procedure for a particular example:

$$y = ax + bx^2$$

The parameters $a$ and $b$ of this formula are to be estimated from a series of data points $[x_i, y_i]$, where the $x_i$ are the independent values and the $y_i$ are the values to be estimated. Replacing the $y_i$ values with their estimates $ax_i + bx_i^2$ gives the following series of data points: $[x_i, ax_i + bx_i^2]$. The actual y values, however, are the $y_i$. Thus the sum of squared errors $S$ for $n$ data points is defined by

$$S = (ax_1 + bx_1^2 - y_1)^2 + (ax_2 + bx_2^2 - y_2)^2 + (ax_3 + bx_3^2 - y_3)^2 + \dots + (ax_n + bx_n^2 - y_n)^2$$

Now we have to calculate the partial derivatives with respect to the parameters $a$ and $b$, and set them equal to zero:

$$\frac{\partial S}{\partial a} = 0 = 2(ax_1 + bx_1^2 - y_1)x_1 + 2(ax_2 + bx_2^2 - y_2)x_2 + 2(ax_3 + bx_3^2 - y_3)x_3 + \dots + 2(ax_n + bx_n^2 - y_n)x_n$$

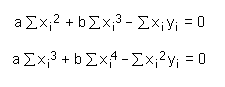
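The derivative with respect to b follows the same pattern as the one for a, except that each residual is multiplied by the square of its x value. Written out for the estimates axi+bxi2 used above, it would read:

$$\frac{\partial S}{\partial b} = 0 = 2(ax_1 + bx_1^2 - y_1)x_1^2 + 2(ax_2 + bx_2^2 - y_2)x_2^2 + 2(ax_3 + bx_3^2 - y_3)x_3^2 + \dots + 2(ax_n + bx_n^2 - y_n)x_n^2$$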

These two equations can easily be reduced by introducing the sums of the individual terms:
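Assuming the estimates axi+bxi2 from above, dividing each derivative by 2 and collecting the terms in a and b gives the reduced equations, with all sums running over the n data points:

$$a\sum_{i=1}^{n} x_i^2 + b\sum_{i=1}^{n} x_i^3 - \sum_{i=1}^{n} x_i y_i = 0$$

$$a\sum_{i=1}^{n} x_i^3 + b\sum_{i=1}^{n} x_i^4 - \sum_{i=1}^{n} x_i^2 y_i = 0$$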

Now, solve these equations for the coefficients a and b:

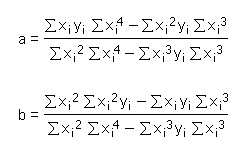
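One way to write this step, assuming the first equation (from the derivative with respect to a) is solved for a and the second (from the derivative with respect to b) is solved for b, is:

$$a = \frac{\sum x_i y_i - b\sum x_i^3}{\sum x_i^2} \qquad\qquad b = \frac{\sum x_i^2 y_i - a\sum x_i^3}{\sum x_i^4}$$

Each expression still contains the other parameter, which is why a further substitution is needed.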

And then substitute the expressions for a and b into their counterparts, with the following final results:
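Carrying out the substitution for the estimates axi+bxi2 and simplifying leads to the following closed-form expressions, where both parameters share the same denominator:

$$a = \frac{\sum x_i y_i \sum x_i^4 - \sum x_i^2 y_i \sum x_i^3}{\sum x_i^2 \sum x_i^4 - \left(\sum x_i^3\right)^2}$$

$$b = \frac{\sum x_i^2 y_i \sum x_i^2 - \sum x_i y_i \sum x_i^3}{\sum x_i^2 \sum x_i^4 - \left(\sum x_i^3\right)^2}$$

As a quick numerical check, these expressions can be sketched in a few lines of Python. The function name `fit_ax_bx2` and the sample data are illustrative assumptions and do not come from the original text; the data are generated without noise from a = 2 and b = 0.5, so the fit should recover these values.

```python
def fit_ax_bx2(x, y):
    """Least squares estimates of a and b for the model y = a*x + b*x**2."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")

    # Sums that appear in the two reduced equations
    sx2 = sum(xi**2 for xi in x)
    sx3 = sum(xi**3 for xi in x)
    sx4 = sum(xi**4 for xi in x)
    sxy = sum(xi * yi for xi, yi in zip(x, y))
    sx2y = sum(xi**2 * yi for xi, yi in zip(x, y))

    # Common denominator of both closed-form expressions
    det = sx2 * sx4 - sx3**2
    if det == 0:
        raise ValueError("need at least two distinct nonzero x values")

    a = (sxy * sx4 - sx2y * sx3) / det
    b = (sx2y * sx2 - sxy * sx3) / det
    return a, b


# Hypothetical noise-free data generated from y = 2x + 0.5x^2
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0 * xi + 0.5 * xi**2 for xi in x]

a, b = fit_ax_bx2(x, y)
print(a, b)
```

The denominator becomes zero only when all nonzero x values are identical, in which case the two parameters cannot be separated; the sketch raises an error in that situation rather than dividing by zero.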
